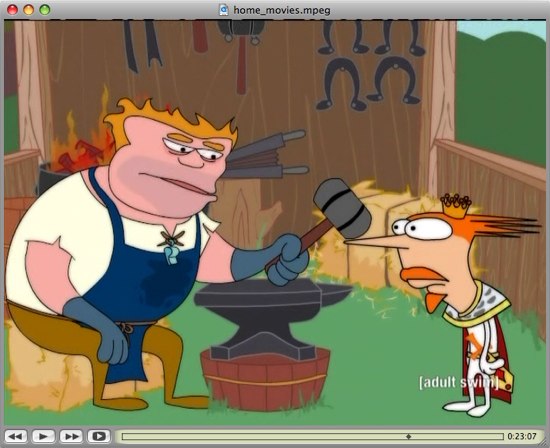

Not bad, right? And that’s resized and compressed to a lossy JPEG. Take my word for it: the original looks fantastic.

It turns out that tivodecode is near-miraculous (the GUIs for it… less so). If you’ve got a recent-model TiVo, you can visit its HTTPS interface, download the stored .tivo files and convert them to MPEG, easy as you please. Of course, the files are pretty big (several gigs for HD recordings), and I don’t currently have a machine on my network beefy and idle enough to transcode them into iPhone-ready files on a regular basis.
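A minimal Python sketch of the decode step, assuming the .tivo file has already been downloaded and that tivodecode accepts the usage `tivodecode -m MAK -o OUTFILE INFILE` (check the usage text of your build). The MAK is the TiVo's Media Access Key; the function names and the example key are hypothetical.

```python
import subprocess
from pathlib import Path


def tivodecode_command(mak: str, tivo_file: Path, out_dir: Path) -> list[str]:
    """Build a tivodecode invocation that writes <stem>.mpg into out_dir.

    Assumes tivodecode's usage: tivodecode -m MAK -o OUTFILE INFILE.
    """
    out_file = out_dir / (tivo_file.stem + ".mpg")
    return ["tivodecode", "-m", mak, "-o", str(out_file), str(tivo_file)]


def decode(mak: str, tivo_file: Path, out_dir: Path) -> None:
    # check=True raises CalledProcessError if tivodecode exits non-zero.
    subprocess.run(tivodecode_command(mak, tivo_file, out_dir), check=True)
```

For example, `decode("0123456789", Path("Show.TiVo"), Path("."))` would write `Show.mpg` to the current directory. Keeping command construction separate from `subprocess.run` makes it easy to log or batch the calls.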

Still: pretty neat. It’s nice to know that I’ve got a very high-quality capture box sitting under my TV if I ever decide I badly need to put some televised video online. And it’s just fun. Do any progressive media watchdogs want to pay me to help them create automated, annotated archives of cable news? Because with this gadgetry we could totally do it on the cheap.

Segmenting and annotating comes from the closed captioning, because that gives you text to recognize and index. We were going to do this a few years ago, as a byproduct of a system we built for archiving Predator video (segmented and annotated from the telemetry). It would even have been legal, because Ted Turner explicitly gave blanket copyright permission for DoD use of CNN (and no one at Time Warner has ever withdrawn it). But there wasn’t much interest, so we never got past a prototype. There are real problems in scaling up: you get into VLDB (very large database) territory quite quickly. And there are still the copyright issues.
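To make caption-driven segmentation concrete, here is a Python sketch that splits caption cues into stories. It assumes the cues have already been extracted as (start time, text) pairs and relies on the common US broadcast-captioning convention of marking a story change with `>>>` (a speaker change is `>>`). The `Cue` type and `segment_by_story` are hypothetical names, and real caption streams will need more robust handling.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    start: float  # seconds from the start of the recording
    text: str


def segment_by_story(cues: list[Cue]) -> list[list[Cue]]:
    """Split caption cues into stories, starting a new one at each '>>>' marker.

    Assumes the convention that '>>>' marks a story change; a '>>' speaker
    change stays inside the current story.
    """
    segments: list[list[Cue]] = []
    for cue in cues:
        # Open a new segment for the first cue or for any story-change marker.
        if not segments or cue.text.lstrip().startswith(">>>"):
            segments.append([])
        segments[-1].append(cue)
    return segments
```

Each segment's first cue gives its start time, and its joined text is what you would annotate and index.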

Yeah, that t2sami tool looks promising. I’d be interested in extracting the CC text alone to create a searchable database.
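A searchable CC database can start out as a simple inverted index. This Python sketch assumes the caption text has already been pulled out of each recording as (start time, text) pairs, however that extraction is done; `CaptionIndex` and the recording IDs are hypothetical, and a real archive would want a proper full-text search engine rather than an in-memory dict.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters, digits and apostrophes;
    # this also drops caption markers such as '>>'.
    return re.findall(r"[a-z0-9']+", text.lower())


class CaptionIndex:
    """Minimal inverted index: word -> set of (recording_id, start_seconds)."""

    def __init__(self) -> None:
        self.postings: dict[str, set[tuple[str, float]]] = defaultdict(set)

    def add(self, recording_id: str, start: float, text: str) -> None:
        for word in tokenize(text):
            self.postings[word].add((recording_id, start))

    def search(self, query: str) -> list[tuple[str, float]]:
        """Return cues containing every query word, sorted by recording and time."""
        words = tokenize(query)
        if not words:
            return []
        # .get avoids creating empty entries in the defaultdict for unknown words.
        hits = set.intersection(*(self.postings.get(w, set()) for w in words))
        return sorted(hits)
```

Each hit points to a recording and an offset in seconds, which is enough to jump straight to the relevant moment in the video.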

The data storage requirements would be significant, of course, but for a media organization, keeping roughly the current news cycle on hand would be fine. You could transcode down to Flash-ish video quality, then just stick the last few months’ worth of video on S3. That would be relatively affordable.
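A back-of-the-envelope Python sketch of the storage math. The 500 kbps bitrate standing in for "Flash-ish" quality is an assumption, not a figure from the post, and the S3 price per GB-month has to come from current pricing rather than from this code.

```python
def archive_size_gb(bitrate_kbps: float, hours_per_day: float, days: int) -> float:
    """Decimal gigabytes needed to store `days` of video at a constant bitrate."""
    bits = bitrate_kbps * 1000 * hours_per_day * 3600 * days
    return bits / 8 / 1e9


def monthly_cost(size_gb: float, price_per_gb_month: float) -> float:
    # Storage only; transfer and request charges are ignored.
    return size_gb * price_per_gb_month
```

At the assumed 500 kbps, one channel recorded around the clock for 90 days comes to `archive_size_gb(500, 24, 90)`, or 486 GB; multiply by the number of channels and pass the result to `monthly_cost` with the current rate.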